For the past two months I have been working on cleaning up the home network and streamlining my backup processes. Anyone who has accumulated PCs and hard drives over time knows that data creep can overburden your network. I used to split drives into a number of partitions to keep data segregated. Over time some partitions filled up while others were barely used. Some didn’t even make sense anymore (FAT32 partition, anyone?). I don’t follow that strategy anymore (root folders segregate enough for me now) except that my operating system is always(!) on its own partition.

This was a spring cleaning exercise brought on by the slow death of my backup server. The machine was basically eight years old, with four 320GB drives and one 1TB Samsung. It was normally on 24/7 but was powered down prior to our Disney vacation. It didn’t want to come back up. After some work, I resuscitated it so I could be certain of what was on each drive. I ordered replacement parts: a new motherboard, an AMD X3 455 CPU, and 8GB of RAM. The case was fine and the power supply was less than eighteen months old.

Investigating the drives

Hard drives are no longer as cheap as they were in mid-2011 and earlier. In addition, since Seagate took over Samsung’s drive manufacturing, there hasn’t been enough data to determine whether that is a good or a bad thing. As a result of these two factors, I chose to use the drives I already had on hand.

Choosing not to expand storage meant I really needed to get a handle on what was stored, and where. During the investigation of the backup machine’s drives, I discovered multiple copies of the same data on different drives. I listed each drive on paper, along with what was on it (including the space each item used). This gave me a clearer picture of whether any data on the backup machine was not really a backup but rather the only original copy. Fortunately there were only a few instances, primarily family video work from back when the machine was being used as my primary desktop. Everything else was a copy of live data found elsewhere on the network.
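The inventory described above was done by hand on paper. For anyone who would rather automate the duplicate hunt, here is a minimal sketch in Python. The function names and the choice of SHA-256 are assumptions made for illustration, not part of the process described here. It groups files by size first, so only files that could possibly match get hashed.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(roots):
    """Return {digest: [paths]} for content found in two or more places."""
    # Pass 1: group every file under the given roots by size.
    by_size = defaultdict(list)
    for root in roots:
        for dirpath, _dirs, files in os.walk(root):
            for name in files:
                path = os.path.join(dirpath, name)
                by_size[os.path.getsize(path)].append(path)

    # Pass 2: hash only files that share a size with another file.
    by_digest = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size cannot be a duplicate
        for path in paths:
            by_digest[file_digest(path)].append(path)

    return {d: sorted(p) for d, p in by_digest.items() if len(p) > 1}
```

Calling `find_duplicates` with a list of drive or folder roots returns only the content that appears more than once. Anything that appears in exactly one place is left out, so it would still need a manual check to confirm whether it is an original.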

Reorganize

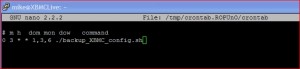

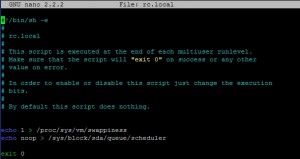

Because I did not have a drive large enough to move everything to wholesale, I spent the better part of three weeks shifting data around. In the end, I removed a net of three drives from the backup server, and am directly protecting more data than before. My entire Openfiler SAN, which has continued to grow in importance, is now being backed up via CIFS rather than by backing up the VM. A single script backs it up nightly and, should the need arise, a single script can rebuild the entire SAN from scratch.

What the reorganization did was help identify and eliminate needless redundancy in the backups, and ensure that nothing that needed to be backed up was being missed. It was silly how much clutter had accumulated across the entire network, and this process ended up freeing a large chunk of network space. Once the network was cleaned up to my satisfaction, I turned my attention to what to protect, and how to protect it better.

Identify important data and classify

The first thing I did was identify data that was irreplaceable. Family photos and videos, and scanned items, fell into this category. These would be the most highly protected.

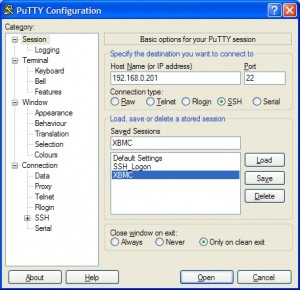

The second category was items that could be recreated, but only with an extreme investment of time. The FLAC files from my CD archiving project are one example. I do not ever want to go back through this process, so this data needed protection and a copy stored offsite. The same goes for the DVDs I have been slowly archiving to the network (with the originals boxed away in the basement) for playing on the XBMC box.

Other data was labeled as nice to have: I would prefer not to lose it, but it would not be the end of the world if I did. Utility installation executables, old DOS games, and notes on various projects over the years are some examples.

Backups of the VMware virtual machines were less important. An online backup would be fine in case of VM corruption. Eventually though, once hard drive prices have come down and quality has gone back up, the most recent backup of each virtual machine from the NFS datastore would be desirable.

Ghost images of our primary PC are not terribly valuable in the case of fire. I’d have to replace all the physical hardware so the image wouldn’t be useful anyhow. They are saved to the network but are not copied offsite.

Downloads and temporary files obviously fell into the final category that could just be ignored. If they were lost, oh well.

What to protect against

I wanted to protect against accidental deletion, corruption of the primary data, fire/tornado, and theft. Each required a different strategy.

Protection against accidental deletion or corruption is easy to implement. I’ve been using this strategy for years. I have a series of scheduled tasks that back up data nightly. Most run on my primary machine but a couple do run on the backup server itself. Plain old reliable XCOPY and Robocopy handle these tasks. This data is saved to the backup server. I do not sync my backups; it is add or update only. If I delete a file, it will remain on the backup unless I specifically go and delete it.
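As a rough illustration of that add-or-update behavior, here is a Python sketch. It is not the XCOPY or Robocopy commands themselves, and the function names and the two-second timestamp slack are assumptions made for the example. A file is copied when it is missing from the backup, differs in size, or is newer on the source. Nothing is ever removed from the destination.

```python
import os
import shutil


def needs_copy(src, dst, mtime_slack=2.0):
    """True if dst is missing, differs in size, or is older than src."""
    if not os.path.exists(dst):
        return True
    s, d = os.stat(src), os.stat(dst)
    # The slack (an assumption) tolerates filesystems with coarse timestamps.
    return s.st_size != d.st_size or s.st_mtime > d.st_mtime + mtime_slack


def add_or_update(src_root, dst_root):
    """Copy new or changed files to dst_root; never delete anything there."""
    copied = []
    for dirpath, _dirs, files in os.walk(src_root):
        rel = os.path.relpath(dirpath, src_root)
        dst_dir = os.path.normpath(os.path.join(dst_root, rel))
        os.makedirs(dst_dir, exist_ok=True)
        for name in files:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_dir, name)
            if needs_copy(src, dst):
                shutil.copy2(src, dst)  # copy2 also carries over the mtime
                copied.append(dst)
    return copied
```

Because the loop only walks the source tree, a file deleted from the source is never visited again and its backup copy stays put. The trade-off is that a file corrupted on the source and then re-saved will overwrite the good backup copy on the next run, which is one reason to keep separate periodic copies as well.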

Theft of hardware is another disaster that needs to be defended against. TrueCrypt handles all my data encryption needs. It is fantastically secure and uses very few system resources. If a thief decides to steal the PCs, they’ll have a (mostly) bootable machine but nothing else. All data requires the password (quite lengthy bunches of gibberish) to be mounted. All backup drives are likewise protected. This might be considered a bit paranoid, but if drives end up in other hands, I like to know that no one can access the bajillion family pictures on them. I also like having everything encrypted in case a drive fails and I need to send it in under warranty. Fortunately this has never been required, but one has to assume it is inevitable.

To protect against fire, I have a two-layered approach. The first layer involves periodic backups to external drives that are stored in a fireproof and waterproof media safe on premises. The safe promises to keep the contents under 155 degrees in a fire, which would be fine for the drives. This step has to be done manually; currently that happens about every two weeks, but it really needs to be more frequent. The second layer is copies of the data offsite. I periodically refresh drives that are housed offsite, in addition to burning DVDs of recent data in the interim and sending them offsite, too.

I have considered backing data up to the “cloud” but have ruled it out, at least for most of my data for now. The price is simply prohibitive, and the fact that Comcast effectively caps monthly bandwidth at 250GB of data (unless I move to a business account) means that it would take many months to get my data pushed up. It is far cheaper (and faster) to use DVDs and hard drives stored offsite, and have a fireproof media safe at the house for the more current backups.
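To put rough numbers on the "many months" claim, here is a small back-of-the-envelope calculation in Python. The archive sizes are hypothetical, since no totals are given here, and the `other_usage_gb` parameter is an assumption standing in for whatever everyday traffic already counts against the cap.

```python
import math


def months_to_upload(total_gb, monthly_cap_gb=250, other_usage_gb=0):
    """Whole months needed to push total_gb through a monthly data cap."""
    usable_gb = monthly_cap_gb - other_usage_gb
    if usable_gb <= 0:
        raise ValueError("no cap left over for uploads")
    return math.ceil(total_gb / usable_gb)


# Hypothetical archive sizes, not figures from this post.
for size_gb in (500, 1000, 2000):
    print(size_gb, "GB ->", months_to_upload(size_gb), "months")
```

Even with the entire 250GB cap devoted to uploads, every terabyte takes at least four months, and normal household usage only stretches that further.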

These strategies continue to evolve but I thought it might be helpful to others to provide an overview of how I protect my data.