Review

In part one of the ESXi build I covered researching compatible hardware for the VMware machine. The post ended with an assembled PC. This post will cover the installation of ESXi 4.0 on the machine, the vSphere client, and the creation of a datastore.

Format the Hard drive(s)

Something I forgot to mention in the hardware post is that I ended up needing to format the new drives as EXT3 rather than leaving them as the pre-formatted NTFS. When I initially tried to install ESXi, no drives were found, despite there being two physical drives present. I formatted the drives by booting an Ubuntu Live CD that was lying around and using GParted. I initially split the first drive into two partitions (old habits die hard), but that was undone by the ESXi installation. Just format each drive as a single EXT3 or EXT4 partition; VMware will then recognize it and reformat it the way it wants.

Update Drivers/Download software

Now that you have the hardware assembled, this is the time to update any drivers. I updated the Intel NIC’s driver on my Gigabyte motherboard.

Once you’ve updated the drivers, you’ll need the ESXi ISO image. Visit the VMware site and register for your free download and license key. Write down the license key from the top of the download page (in two locations, as it is important), and then download the ISO. You might as well save yourself some time and download the vSphere client while you are there, too. Burn the ESXi ISO to CD. Choose a slower burn rate to ensure a good read.

The Installation

Change the PC’s BIOS, if needed, to boot from the newly created CD. Slap the ESXi install CD into the drawer, and restart the machine. Once it boots, you’ll see the following screen:

Press ENTER to begin the install, then F11 to accept the EULA. You’ll then be prompted to choose which drive you want to install ESXi on. I selected the first. The install will create some logical partitions behind the scenes, and then leave the rest for a VM datastore. Press ENTER, then F11 to start the install. When it has finished, remove the CD and reboot the machine. I kept the boot order the same for the time being (CD, then HD). ESXi boots up quickly.

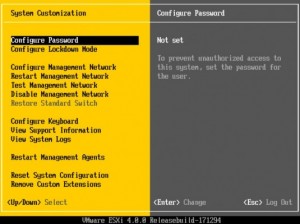

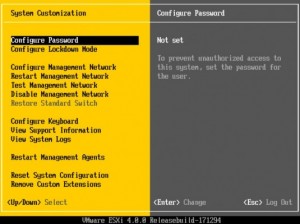

Press F2 to Customize the System. The first thing I did was create a nice long password. I also configured the management network and set a static IP address.

SSH

You will need to enable SSH on the server.

1. Press Alt-F1. (Note: nothing you type on this screen is echoed back, but trust me, it is there.)

2. Type “unsupported” and press ENTER. (Note: this is not echoed to the screen.)

3. Enter the root password.

4. “vi /etc/inetd.conf”

5. Delete the “#” from the start of the ssh line (i for insert, backspace over the #, ESC, colon, wq, ENTER).

6. “services.sh restart” (Note: if that doesn’t work, run “ps aux | grep inetd” to find the inetd PID, then “kill -9 <PID>”.)
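If it helps to see what step 5 actually changes, here is a minimal Python sketch of the same edit applied to a copy of the file's text. It is only an illustration: the function name `enable_ssh` and the sample lines are made up for this example, and the real entries in your /etc/inetd.conf will have different fields. On the host itself I just used vi as described above.

```python
def enable_ssh(inetd_conf_text: str) -> str:
    """Return the text with commented-out ssh service lines uncommented.

    Hypothetical helper for illustration; it only mirrors the manual vi edit.
    """
    out = []
    for line in inetd_conf_text.splitlines():
        stripped = line.lstrip()
        if stripped.startswith("#"):
            fields = stripped[1:].split()
            # Only touch lines whose first field (the service name) is "ssh".
            if fields and fields[0] == "ssh":
                line = stripped[1:].lstrip()
        out.append(line)
    return "\n".join(out) + "\n"


# Made-up sample content; not copied from a real ESXi host.
sample = (
    "#ssh stream tcp nowait root /sbin/example-sshd\n"
    "#ftp stream tcp nowait root /sbin/example-ftpd\n"
)
print(enable_ssh(sample))
```

Only the ssh entry loses its "#"; every other line, commented or not, is passed through unchanged.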

Relocate physical server

At this point, I no longer needed to have direct keyboard access to the new server, so I powered the machine down. After disconnecting the keyboard and mouse, the machine was moved across the basement and placed up on the shelf next to the backup PC that was humming away up there. I connected the power to the APC UPS, and ran a short Cat5e cable from the server to the gigabit switch located right there between the two PCs. I was able to then power up the machine and go back across the room to administer it from my Windows box.

vSphere Client

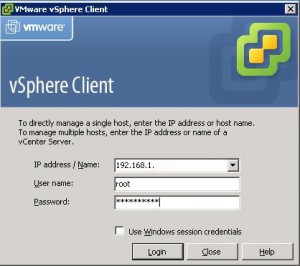

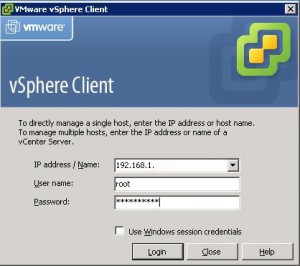

To administer the ESXi server, you will need to install the vSphere client on a Windows box on your network. It requires .NET 3.5, so you’ll need to grab that, too, if you don’t have at least that release installed. I installed the vSphere client on my primary PC. It took a while, so be forewarned: it might be best to go grab something to eat while it is doing its thing.

Once the client has installed, you can fire it up. You’ll get a logon prompt. Enter the IP address of your ESXi host (the static address from earlier), the username “root”, and your password.
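If the logon fails, it can be worth checking that the host is reachable at all before blaming the password. The sketch below is a rough check, assuming the client talks to the host over HTTPS on TCP port 443; the function name `is_port_open` and the example address in the comment are placeholders of mine, not anything from the ESXi setup.

```python
import socket


def is_port_open(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Example usage (replace with the static IP you set earlier):
# print(is_port_open("192.168.1.50"))
```

A result of False only tells you the port did not answer; it does not say whether the cause is the cable, the switch, or the static IP settings.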

The first time you start up the client, you will likely receive a security warning. Click the checkbox, then click Ignore.

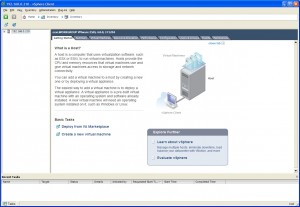

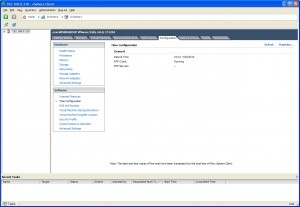

Once the client is open, you will see this screen:

Now is a great time to enter your ESXi license key. Click the Configuration tab, then the Licensed Features link on the left side, under Software. Click Edit for the license source. Click Use Serial Number. Type in the license key you were given at the VMware download page, and follow the additional prompts to disable the ESX Server Evaluation.

Create datastore(s)

You will need to create a datastore to house your virtual machines, and to hold your installation ISOs. You’ll be using the ISO files for machine installations as loading from an ISO is just so much faster than with a physical CD drive.

Select the “Configuration” tab from within the vSphere Client, and then click the “Create New Datastore” link to start the “Add Storage” wizard.

Leave the default “Disk/LUN” option selected. If you have more than one disk in your server, select which one you wish to use. If you only have one disk, or have selected the boot disk you installed ESXi on, you will see there are already a number of partitions taking up about 1GB of space. This is the ESXi installation so you don’t want to overwrite it! Select the “Use Free Space” option and click Next.

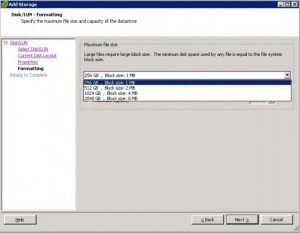

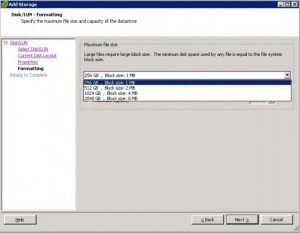

Enter a suitable name for your datastore (I used the unimaginative DataStore1 as mine), then click Next. On the next screen you’ll have to choose the block size for your datastore.

Choosing a block size when creating a VMFS datastore

I want to take a moment to discuss block sizes and how they impact the maximum size of a virtual disk. When you create a datastore, you specify the block size for that datastore. Once the datastore exists, there is no way to change its block size without reformatting it (and losing any data on it). The following are your choices:

1MB block size – 256GB maximum file size

2MB block size – 512GB maximum file size

4MB block size – 1024GB maximum file size

8MB block size – 2048GB maximum file size

I chose to stick with the default 1MB with both of my datastores. The next physical drive I add will likely be created as 4MB. I encountered an issue with needing more than 256GB of storage for my OpenFiler installation (fortunately I could just add multiple 256GB virtual disks to a single pool) and don’t want to run into a similar issue down the road. These maximum sizes are good to keep in mind when you add a new datastore.
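To make the trade-off concrete, here is a small Python sketch built only from the table above. It treats the listed limits as exact round numbers, which is an assumption, and the function names are mine. It picks the smallest block size that fits a given virtual disk, and also works out how many virtual disks the "add several and pool them" workaround needs.

```python
import math

# Block size in MB -> maximum file size in GB, taken from the list above.
MAX_FILE_GB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def smallest_block_size_mb(disk_gb: float) -> int:
    """Return the smallest block size (MB) whose limit fits a disk of disk_gb."""
    for block_mb in sorted(MAX_FILE_GB):
        if disk_gb <= MAX_FILE_GB[block_mb]:
            return block_mb
    raise ValueError(f"{disk_gb} GB exceeds the largest limit in the table")


def disks_needed(total_gb: float, block_mb: int = 1) -> int:
    """Return how many maximum-size virtual disks are needed to reach total_gb."""
    return math.ceil(total_gb / MAX_FILE_GB[block_mb])


print(smallest_block_size_mb(300))  # 2
print(disks_needed(600))            # 3
```

So a 300GB virtual disk needs at least a 2MB block size, while on a 1MB datastore 600GB of storage means three separate virtual disks.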

Click Next. Check your settings. If all is well, click Finish and wait for ESXi to do its thing. Eventually the new datastore will appear in the host summary.

Network Settings

There are many ways the network can be set up. A production machine would have at least two physical NICs and VLANs. My home network does not require that redundancy, so I left the settings as default.

Network Time Configuration

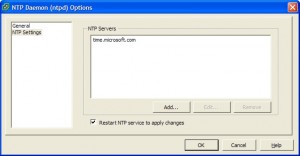

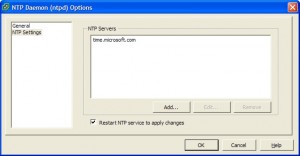

You’ll want your server to keep accurate time, so we’ll set that up. Click Time Configuration.

Then click Properties.

Click Options. Select NTP settings at the left, then click Add. Type in your time server, or just use Microsoft’s.

Click OK. Checkmark the Restart…, and then press OK until you are back at the main configuration screen. You’re now set on time.
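If you want to confirm that a time server actually answers before typing it into the dialog, a bare-bones SNTP query from your Windows box will do. This is a sketch under my own assumptions, not part of the ESXi setup: the function names are made up, it sends a plain version 3 client request to UDP port 123, and it reads only the whole-seconds part of the reply's transmit timestamp.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_request() -> bytes:
    """Build a 48-byte client request: LI=0, version=3, mode=3, rest zero."""
    return b"\x1b" + 47 * b"\0"


def parse_transmit_time(packet: bytes) -> int:
    """Return the server's transmit time as Unix seconds."""
    if len(packet) < 48:
        raise ValueError("reply is shorter than an NTP header")
    # The transmit timestamp's seconds field sits at bytes 40-43, big-endian.
    seconds = struct.unpack("!I", packet[40:44])[0]
    return seconds - NTP_EPOCH_OFFSET


def query_time(server: str, timeout: float = 3.0) -> int:
    """Ask server for the time; raises OSError if it does not answer."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (server, 123))
        packet, _ = sock.recvfrom(512)
    return parse_transmit_time(packet)
```

Calling `query_time` with your chosen server name should return a number close to `time.time()` on a machine with a correct clock; a timeout suggests the server, or a firewall in between, is not passing NTP.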

Veeam FastSCP

You can browse datastores from within the vSphere client and move files to and from the server that way. I’ve found that to be the simplest method (no extra software to worry about), but some ESX users have found it slower than they would like. There is a free product available that my office uses, and that I have installed at home as well: Veeam FastSCP. I don’t have much experience with it, but it is another option if you encounter slow transfers.

Back up your ESXi configuration

Now that you have your ESXi server set up, it might be nice to back up its configuration. In the event the ESXi host dies, you can quickly recover the configuration from the file you created. I cannot personally vouch for its effectiveness, but it cannot hurt to have this safety net in place. Download the Backup Configurator and install it. Run it and follow the prompts to create the file of your settings. Save this file somewhere safe (not on the ESXi box, obviously). It will be extremely small.

That’s about it for how I installed ESXi and set up the vSphere client. I have greatly enjoyed having the server. Having a dedicated machine available for running virtual machines is a liberating experience when you are used to VirtualBox on your primary PC. I keep the vSphere client running all the time, and can pop into a running machine’s console at any time. When done, I just close the console and the VM continues to run, consuming no resources on your PC. It’s very nice. The geek in me enjoys it.

Filed under:

Esxi by mtrello_Blog