Introduction

All the datastores on my ESXi box are currently local. To back up virtual machines effectively, I needed an external datastore, and I chose to use Network File System (NFS).

None of my virtual machines are mission critical, but several would take some effort to reinstall and restore to their current state. I could back up the VMs manually as needed, but automated tasks have a far greater chance of getting done than ones that rely on memory. Automation has served me well in the past. This document covers how to set up an NFS share on a Windows machine and how to create a datastore on ESXi linked to it.

Obtaining the group and password files

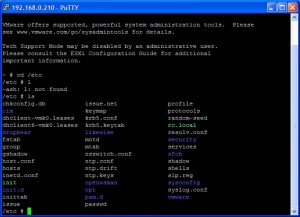

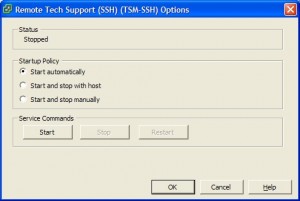

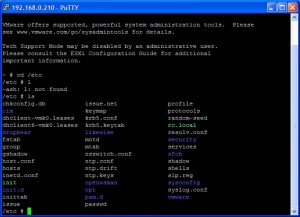

Setting up NFS requires copies of the group and passwd files from the ESXi server on the Windows machine that will host the NFS share. Getting them requires a connection to the Tech Support Mode command line interface.

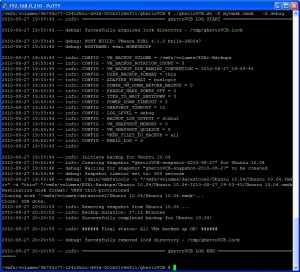

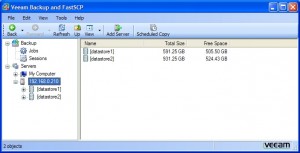

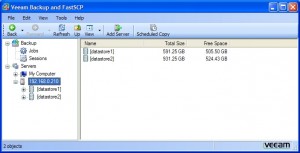

Start up Veeam Backup and FastSCP, then select Tools -> PuTTY.

Log on with your user name and password. I chose to copy the files to the ISOs folder on datastore1 to make them easy to move elsewhere.

1. Type ‘cd /etc’.

2. Type ‘cp passwd /vmfs/volumes/datastore1/ISOs’.

3. Type ‘cp group /vmfs/volumes/datastore1/ISOs’.

I then copied these files to my Windows machine, named DUALBOOTXP (right-click -> Copy in Veeam’s Explorer windows).

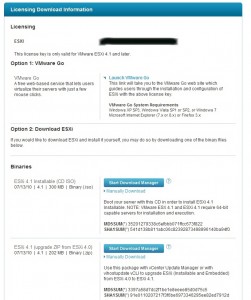

Installing Windows Services for UNIX (WSFU)

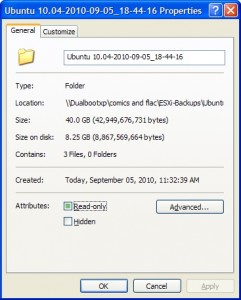

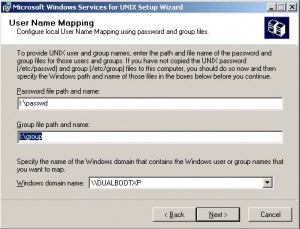

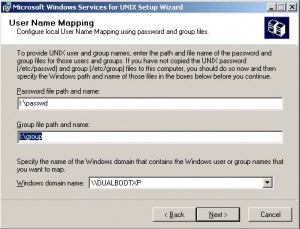

I downloaded Windows Services for UNIX 3.5. I set up NFS on my backup machine (DUALBOOTXP), an older single-core machine with four hard drives. A 1TB drive had plenty of available space, so I created a folder on it named ESXi-Backups. I installed both the NFS client and the NFS server. The installer asks for the locations of the passwd and group files copied above.

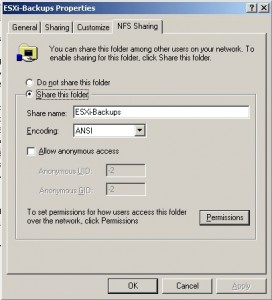

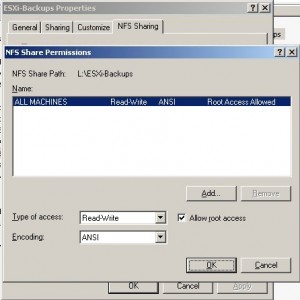

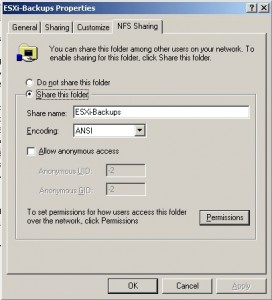

When installation is complete, right-click the folder and select Properties; a new tab, NFS Sharing, is now available. Click that tab and share the folder. I named the share ESXi-Backups.

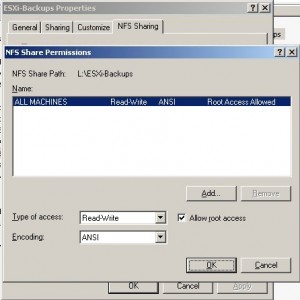

Select Permissions. Change the type of access to “Read+Write”, and select the option to allow root access.

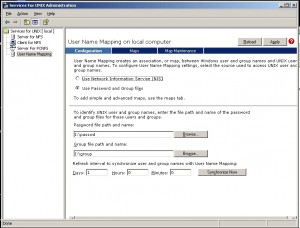

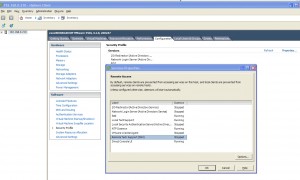

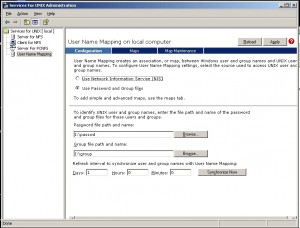

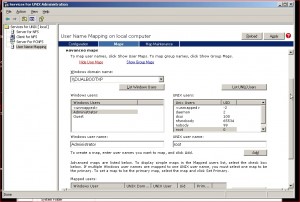

Now open UNIX Administration via Start -> Programs -> Windows Services for UNIX. Select User Name Mapping.

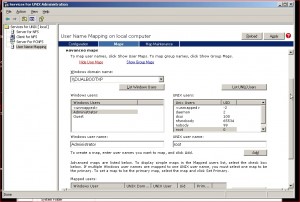

Click on Maps. Click on List Windows Users and List UNIX users. Highlight Administrator on the Windows side and root on the UNIX side. Click Add. Click OK at each prompt. Then click Apply.
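The UNIX user list in this step comes from the passwd file copied off the ESXi server earlier, so it can help to confirm that the copy actually contains a root entry before mapping it. The following is a minimal sketch in Python, assuming the standard colon-separated passwd layout (name, password, UID, GID, ...). The function name `parse_passwd` and the sample content are hypothetical and not taken from a real ESXi host.

```python
def parse_passwd(text):
    """Map each user name to its (uid, gid) from passwd-format text."""
    users = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split(":")
        if len(fields) < 4:
            continue  # skip malformed lines
        name, _password, uid, gid = fields[:4]
        users[name] = (int(uid), int(gid))
    return users


# Illustrative sample only; a real passwd file will have different entries.
sample = (
    "root:x:0:0:Administrator:/:/bin/ash\n"
    "nobody:x:99:99:Nobody:/:/sbin/nologin\n"
)
users = parse_passwd(sample)
print("root" in users, users.get("root"))
```

To check the real file, read it with `open(path).read()` and pass the text to `parse_passwd`; the root account should appear with UID 0.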

Open Port 2049 in your Windows Firewall [IMPORTANT ITEM #1!]

Skipping this step cost me a good thirty minutes. NFS datastore creation failed every time until I disabled the firewall, and then it suddenly worked. A quick Google search showed that NFS servers need port 2049 open. I re-enabled the firewall, opened the port, added the datastore, and everything worked fine. But I am getting ahead of myself.
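A quick way to tell a firewall problem apart from an NFS configuration problem is to test whether port 2049 is reachable from another machine on the network. Here is a minimal sketch in Python using only the standard library. The function name `nfs_port_open` is hypothetical, and the check only covers TCP reachability; it does not prove that the NFS service itself is configured correctly.

```python
import socket


def nfs_port_open(host, port=2049, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `nfs_port_open("DUALBOOTXP")` (or the machine's IP address) should return True once the port is open in Windows Firewall and the NFS server is running, and False while the firewall is still blocking it.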

Create an NFS Storage VMkernel Port in vSphere [IMPORTANT ITEM #2!]

You will need to create a new VMkernel port. This was the first gotcha for me. It took me at least two hours over the course of several days to figure out these steps. I hope this saves you some time.

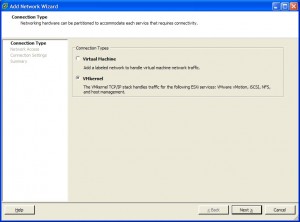

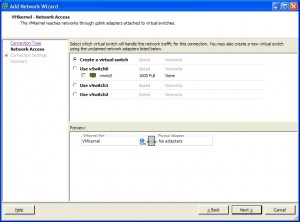

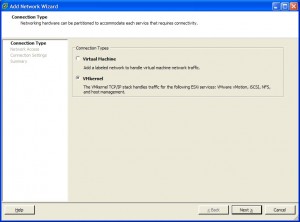

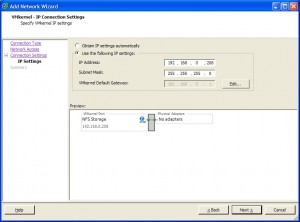

1. Go to Configuration -> Networking -> Add Networking. Select VMkernel as the connection type.

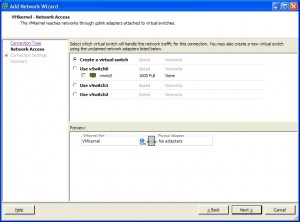

2. Create a virtual switch.

3. Name the label NFS Storage.

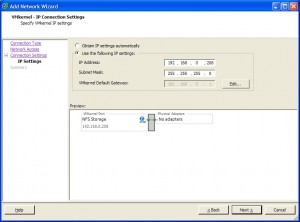

4. Give the port a valid, unused IP address on your network.

5. The new VMkernel port is now displayed. One more task remains to get it working properly.

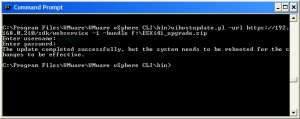

Go back into PuTTY and log on to Tech Support Mode.

1. Type ‘esxcfg-vswitch -A VMkernel vSwitch2’. vSwitch2 is the switch that was just created.

2. Type ‘esxcfg-vmknic -a -i 192.168.0.208 -n 255.255.255.0 VMkernel’. 192.168.0.208 is the IP address used in the previous step.

3. Type ‘esxcfg-route 192.168.0.1’. 192.168.0.1 is your network gateway.
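Since the IP address, netmask, and gateway in these commands have to agree with each other, a small sanity check before typing them can save a round of troubleshooting. The sketch below uses Python's standard `ipaddress` module. The function name `check_vmkernel_address` is hypothetical, and the check cannot tell whether the address is already in use by another device; it only confirms that the values are consistent.

```python
import ipaddress


def check_vmkernel_address(ip, netmask, gateway):
    """Return a list of problems; an empty list means the values look consistent."""
    problems = []
    iface = ipaddress.ip_interface(f"{ip}/{netmask}")
    network = iface.network
    # A host cannot use the network or broadcast address of its subnet.
    if iface.ip in (network.network_address, network.broadcast_address):
        problems.append(f"{ip} is the network or broadcast address of {network}")
    # The default gateway must be reachable on the same subnet.
    if ipaddress.ip_address(gateway) not in network:
        problems.append(f"gateway {gateway} is outside {network}")
    return problems


# Values taken from the commands above.
print(check_vmkernel_address("192.168.0.208", "255.255.255.0", "192.168.0.1"))
```

An empty list means the address and gateway sit on the same subnet; any strings in the list describe what to fix before running the esxcfg commands.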

Now you are ready to add the datastore.

Configuring ESXi to mount the NFS share as a datastore

Next up was configuring the VMware server to see the NFS share as a new datastore.

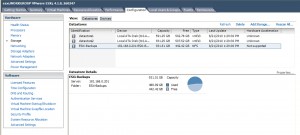

1. Open vSphere client.

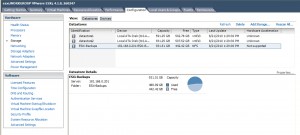

2. Select the Configuration tab, then Storage.

3. Click Add Storage.

4. Select Network File System.

5. Enter the server name (or IP address; I used the IP address), the name of the folder you shared, and a name for the new datastore.

6. Click Next, then OK.

That is it for installing and setting up the NFS server and shared folder. My next post will describe setting up ghettoVCB.sh for automated virtual machine backups.