Multiple vCPU encoding performance in ESXi 4.1

Background

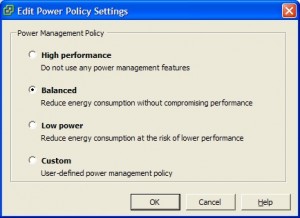

This is a continuation of my post about vSphere 4.1’s new Power Policy settings. This post looks more closely at how adding vCPUs to an encoding process affects its performance, and whether the power settings make much of a difference on a more heavily loaded system.

An excellent document on vSphere CPU scheduler performance can be found on the VMware site. Another one, Determining if multiple virtual CPUs are causing performance issues, is worth a read as well.

Power Policy Settings

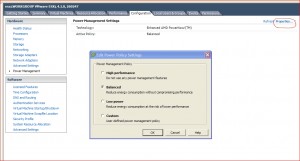

The Power Policy settings can be reached from the vSphere Client: Configuration -> Power Management -> Properties.

Testing Performance

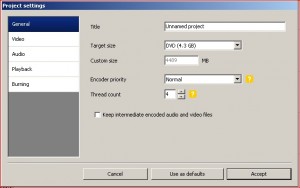

I used nineteen AVI files of my children playing soccer as input to DVDFlick, and created an AVI file as output. The input files were on the OpenFiler virtual appliance, and the output went to a partition on another local drive, to eliminate disk contention from the equation. I set DVDFlick’s encoder priority to Normal, and ran the tests on a Windows Server 2003 virtual machine. DVDFlick will effectively use as many CPU cores as are made available to it, so I ran the test with one through four vCPUs. DVDFlick also lets you select how many threads to run with, so I varied that as well.

I originally did not plan to test with more than two vCPUs, as my server has only four cores, but decided to give it a go while everything was set up. I would not normally allocate more than two vCPUs to any active VM on my VMware box, as it would negatively affect the other active VMs. But this was a test, so all four were included in the results.

I ran each test twice and averaged the two runs until it was apparent that the runs in each set differed by no more than a few seconds. At that point, I decided only one run per set was needed. The results gave me some nice data for comparing the three settings.

The Power Draw column indicates the average draw during the encoding phase. It is provided as a relative value for comparisons.

High Performance setting

| vCPU Count | Power Draw (Watts) | Time (1 thread) | Time (2 threads) | Time (3 threads) | Time (4 threads) |
|---|---|---|---|---|---|
| 1 | 114 | 29:09 | 29:35 | | |
| 2 | 124 | 23:11 | | | |
| 3 | 123 | 23:39 | 20:57 | 22:21 | |
| 4 | 125 | 24:32 | 22:12 | 20:37 | |

Balanced setting

| vCPU Count | Power Draw (Watts) | Time (1 thread) | Time (2 threads) | Time (3 threads) | Time (4 threads) |
|---|---|---|---|---|---|
| 1 | 112 | 29:20 | 29:50 | | |
| 2 | 122 | 23:29 | | | |
| 3 | 124 | 24:28 | 21:42 | 22:53 | |
| 4 | 127 | 24:54 | 22:01 | 20:54 | |

Lower Power setting

| vCPU Count | Power Draw (Watts) | Time (1 thread) | Time (2 threads) | Time (3 threads) | Time (4 threads) |
|---|---|---|---|---|---|
| 1 | 113 | 30:09 | 30:41 | | |
| 2 | 123 | 26:20 | | | |
| 3 | 121 | 27:51 | 24:52 | 26:20 | |
| 4 | 125 | 28:01 | 26:00 | 23:53 | |

Initial observations

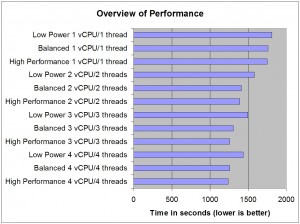

Power usage varied little among the three settings while the test was running. The charts also show that going from one to two vCPUs increased the total draw by 10 W in each case, while each additional vCPU beyond two changed it by only a few watts.
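The per-vCPU increments can be checked with a short Python sketch. The wattages are transcribed from the Power Draw column of the three tables above; the dictionary and function names are illustrative only.

```python
# Average host power draw (watts) while encoding, transcribed from the
# Power Draw column of the tables above. Index 0 = 1 vCPU ... index 3 = 4 vCPUs.
POWER_DRAW = {
    "High Performance": [114, 124, 123, 125],
    "Balanced": [112, 122, 124, 127],
    "Lower Power": [113, 123, 121, 125],
}


def step_deltas(draws):
    """Change in watts for each added vCPU: 1->2, 2->3, 3->4."""
    return [after - before for before, after in zip(draws, draws[1:])]


for setting, draws in POWER_DRAW.items():
    print(f"{setting:<16} {step_deltas(draws)}")
```

For every setting the first step is +10 W, and the later steps range from -2 W to +4 W.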

Any time more threads were used than vCPUs allocated, performance suffered. This is not a big surprise, and even DVDFlick cautions against this practice. Performance also suffered in the other direction, when running with fewer threads than vCPUs. Obviously, you would want to run with the same number of threads as vCPUs.
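The one-vCPU rows give the clearest example of running more threads than vCPUs. A minimal Python sketch puts a number on that penalty; the times are transcribed from the tables above, and the helper names are my own.

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' encoding time to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# One-vCPU rows from the tables above: (1 thread, 2 threads).
ONE_VCPU = {
    "High Performance": ("29:09", "29:35"),
    "Balanced": ("29:20", "29:50"),
    "Lower Power": ("30:09", "30:41"),
}


def percent_longer(baseline, other):
    """How much longer (in percent) `other` took than `baseline`."""
    base = to_seconds(baseline)
    return 100.0 * (to_seconds(other) - base) / base


for setting, (one_thread, two_threads) in ONE_VCPU.items():
    print(f"{setting:<16} +{percent_longer(one_thread, two_threads):.1f}%")
```

On a single vCPU this works out to roughly 1.5% to 1.8% extra encoding time.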

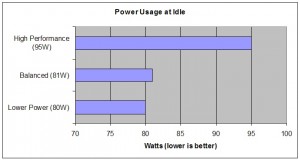

Power usage was effectively the same across all three settings while encoding. I had expected the ratios to look more like the idle figures, but that is why I ran these tests.

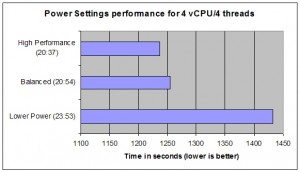

Encoding times for the Balanced setting were very close to those of High Performance: six of the nine runs were within 2%, and none was more than about 3.6% slower. VMware states that the Balanced setting “reduces energy consumption without sacrificing performance.” From my tests, this statement holds up.
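The Balanced-versus-High Performance comparison can be reproduced with a small Python sketch. It pairs the two tables cell by cell, in the order the values appear in each row; the times are transcribed from the tables above, and the variable and helper names are illustrative.

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' encoding time to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Encoding times per vCPU count, in the order they appear in each table row
# (empty cells omitted), transcribed from the tables above.
HIGH_PERFORMANCE = {
    1: ["29:09", "29:35"],
    2: ["23:11"],
    3: ["23:39", "20:57", "22:21"],
    4: ["24:32", "22:12", "20:37"],
}
BALANCED = {
    1: ["29:20", "29:50"],
    2: ["23:29"],
    3: ["24:28", "21:42", "22:53"],
    4: ["24:54", "22:01", "20:54"],
}


def percent_longer(baseline, other):
    """How much longer (in percent) `other` took than `baseline`."""
    base = to_seconds(baseline)
    return 100.0 * (to_seconds(other) - base) / base


# Pair each Balanced run with the High Performance run in the same cell.
diffs = [
    percent_longer(hp, bal)
    for vcpus, hp_times in HIGH_PERFORMANCE.items()
    for hp, bal in zip(hp_times, BALANCED[vcpus])
]
within_2 = sum(abs(d) <= 2.0 for d in diffs)
print(f"largest gap: {max(diffs):.1f}%, within 2%: {within_2} of {len(diffs)}")
```

One Balanced run (22:01 against 22:12) was actually slightly faster than its High Performance counterpart.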

The Lower Power encoding times were noticeably slower than those of the other two settings. Four cores/four threads took about three minutes longer (12.5%) to complete than under the other two settings, which is what VMware cautions will happen. These results suggest there is no compelling reason to ever use this setting: it made almost no difference in power usage but imposed a large performance penalty.
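The size of the Lower Power penalty depends on which baseline you divide by. A small Python sketch, using the four-cores/four-threads times discussed here (20:37, 20:54 and 23:53) and helper names that are purely illustrative, shows both readings:

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' encoding time to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def penalty(fast, slow):
    """Return (extra seconds, percent longer than `fast`, share of `slow`)."""
    f, s = to_seconds(fast), to_seconds(slow)
    extra = s - f
    return extra, 100.0 * extra / f, 100.0 * extra / s


# Four-vCPU/four-thread times as discussed in the text above.
FOUR_BY_FOUR = {"High Performance": "20:37", "Balanced": "20:54"}
LOWER_POWER = "23:53"

for setting, t in FOUR_BY_FOUR.items():
    extra, longer, share = penalty(t, LOWER_POWER)
    print(f"vs {setting}: +{extra // 60}:{extra % 60:02d}, "
          f"{longer:.1f}% longer, {share:.1f}% of the Lower Power time")
```

Against Balanced, the extra 2:59 is 12.5% of the Lower Power run time, or about 14% longer than Balanced; against High Performance the gap is 3:16, nearly 16% longer.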

Four threads on four vCPUs finished in roughly 29% less time than one thread on one vCPU (about 1.4 times the throughput) on the Balanced and High Performance settings.
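"Faster" can mean either a higher throughput ratio or a shorter run, and the two numbers differ. A minimal Python sketch computes both from the one/one and four/four times given in the tables above; the function and dictionary names are my own.

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' encoding time to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def speedup(single, multi):
    """Return (speedup factor, percent reduction in encoding time)."""
    s, m = to_seconds(single), to_seconds(multi)
    return s / m, 100.0 * (s - m) / s


# (1 vCPU/1 thread, 4 vCPUs/4 threads) as discussed in the text above.
RUNS = {
    "High Performance": ("29:09", "20:37"),
    "Balanced": ("29:20", "20:54"),
}

for setting, (single, multi) in RUNS.items():
    factor, saved = speedup(single, multi)
    print(f"{setting:<16} {factor:.2f}x, {saved:.1f}% less time")
```

Both settings come out at roughly 1.4 times the throughput, or about 29% less wall-clock time.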

Comparisons to my Desktop

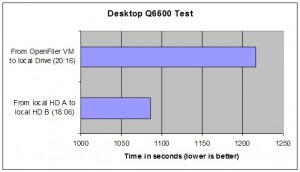

While in the mood to test, I ran two benchmarks on my desktop. That machine runs XP on a Q6600 quad-core with 4 GB of RAM. I rebooted it first and didn’t open anything else after boot. I used the same test setup as above.

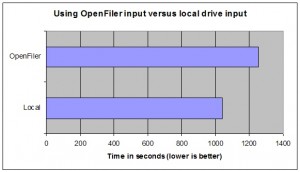

The first benchmark, reading from the OpenFiler virtual machine and writing to a local drive, took almost exactly the same time as the Server 2003 VM under either the Balanced or High Performance setting. I was very pleased, until I saw the results from the second test: local drive A to local drive B was quite a bit faster on the desktop. The 2003 VM should have been doing almost the same thing. Now I needed to test the VM using input and output partitions on two separate physical drives in the ESXi box, skipping OpenFiler altogether. Taking OpenFiler out of the equation would show whether it was the bottleneck.

Removing OpenFiler as input source

I moved the input and output to 10 GB partitions on the two local drives: a Western Digital WD6400AAKS and a Samsung F3 HD103SJ. I used four threads on the four-vCPU virtual machine. Again, I ran each test twice and averaged the results. For the second set, the source and target drives were swapped.

MS Server 2003 VM; 4 vCPU/4 threads, Balanced Power setting

| From local 640 to local 1TB | From local 1TB to local 640 |
|---|---|
| 17:22 | 17:43 |

By eliminating OpenFiler, we reduced the runtime from 20:54 to 17:22 and 17:43, a saving of up to 3-1/2 minutes! This was another result I was not expecting. I will have to investigate this further in the future.
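The OpenFiler saving is simple subtraction, sketched here in Python. The baseline is the four-vCPU/four-thread Balanced time through OpenFiler from the tables above (20:54); the helper names are illustrative.

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' encoding time to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(seconds):
    """Format a number of seconds as 'm:ss'."""
    return f"{seconds // 60}:{seconds % 60:02d}"


# Four-vCPU/four-thread Balanced run with input on OpenFiler,
# versus the two local-drive runs.
VIA_OPENFILER = "20:54"
LOCAL_RUNS = {"640 to 1TB": "17:22", "1TB to 640": "17:43"}

for label, t in LOCAL_RUNS.items():
    saved = to_seconds(VIA_OPENFILER) - to_seconds(t)
    print(f"{label}: {to_mmss(saved)} faster")
```

The two directions save 3:32 and 3:11 respectively.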

Conclusion

Using multiple vCPUs for virtual machines that can take advantage of multiple threads can definitely increase performance. Going from one virtual CPU to two in my tests showed the “biggest bang for the buck”. It bears repeating that there were no other virtual machines contending for resources during my testing. If there had been, the outcome might have been affected.

I have vSphere set to the Balanced Power setting. It reduces my power draw by 15% whenever the host is idle, with no meaningful loss of performance.
