AoE (ATA over Ethernet) benchmarks on my home network

This week I stumbled upon a technology that was new to me: AoE, or ATA over Ethernet. This protocol was being hyped as a faster, much cheaper alternative to iSCSI and NFS. In a nutshell, AoE “is a network protocol, designed for simple, high-performance access of SATA devices over Ethernet”. I found a short description of a typical setup on Martin Glassborow’s site that demonstrated how quickly AoE could be set up. This sounded very interesting to me and worth investigating further. I was curious to see whether it would be anywhere near local-storage speeds in my set-up.

I turned to the Ubuntu documentation to see how to set up AoE on an Ubuntu 10.04 virtual machine. I created a small 10GB test disk with vSphere and attached it to my Ubuntu 10.04 VM. After a reboot, I started up the Disk Utility (System/Administration/Disk Utility), formatted the volume, and then mounted it. I noted the device name (sdb), as it is needed in steps 4 and 5. After that I performed the following steps in a terminal window:

1. sudo apt-get install vblade

2. sudo ip link set eth0 up

3. I then tested to make sure it was working:

   sudo dd if=/dev/zero of=vblade0 count=1 bs=1M

   sudo vblade 1 1 eth0 vblade0

4. I exported the storage:

   sudo vbladed 0 1 eth0 /dev/sdb

5. I also edited rc.local to add the above line to startup:

   sudo nano /etc/rc.local
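
For reference, the edited /etc/rc.local might end up looking roughly like the sketch below. It assumes the stock Ubuntu rc.local layout (a `#!/bin/sh -e` first line and a closing `exit 0`) and reuses the interface name, shelf/slot numbers, and device from the commands above; adjust them for your own machine. Because rc.local runs as root at boot, sudo is not needed there.

```shell
#!/bin/sh -e
# /etc/rc.local (sketch): re-export the AoE disk at every boot.
# eth0, shelf 0 / slot 1, and /dev/sdb are the values used in this post;
# they are assumptions for any other setup.

# Make sure the network interface is up before exporting.
ip link set eth0 up

# Export /dev/sdb as AoE shelf 0, slot 1 on eth0 (vbladed runs in the background).
vbladed 0 1 eth0 /dev/sdb

exit 0
```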

Next, I began setting up the client side (a Windows XP machine). I downloaded the StarWind Windows AoE Initiator, which required me to register first. I installed the application on the Windows box and started it up. I added a new device, chose the adapter, and selected the drive that was listed. Windows immediately recognized the drive as a newly connected disk. Very cool.

In XP’s Computer Management, I initialized the new drive and formatted it as NTFS. Formatting the 10GB drive took only a few seconds. Everything about this AoE-connected drive made it appear as if it was directly connected to my machine. The whole process I’ve described to this point took a bit less than twenty minutes, not counting the few minutes to register at StarWind. Now I wanted to see if it was as fast as local storage.

Testing

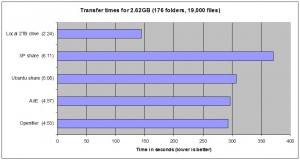

I chose a local folder that was 2.62GB in size, with 176 folders within it containing 19,000 files. This was small enough to not take all night for the tests, but also had enough small files within to make it more challenging than simply a single, large file. I popped up the system clock (with a second hand), noted the start time when I pasted the folder, and stopped the clock when the transfer completed. I did nothing else on my machine while the tests ran. The results are below.
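
I timed these copies by hand on the Windows client, but the same measurement could be scripted. The sketch below is only an illustration and assumes a Linux client with the share already mounted; the function names `timed_copy` and `throughput` are made up for this example. It copies a folder, flushes pending writes with `sync`, and turns the elapsed whole seconds into an approximate MB/s figure.

```shell
#!/bin/sh
# Sketch: time a recursive folder copy and report approximate throughput.
# Both function names are hypothetical helpers, not part of any tool.

# throughput SIZE_KB SECONDS: print MB/s with one decimal place.
throughput() {
    awk -v kb="$1" -v s="$2" 'BEGIN {
        if (s < 1) s = 1            # avoid dividing by zero on very fast copies
        printf "%.1f\n", kb / 1024 / s
    }'
}

# timed_copy SRC DEST: copy SRC to DEST and print elapsed time and MB/s.
timed_copy() {
    src=$1
    dest=$2
    size_kb=$(du -sk "$src" | awk '{print $1}')   # folder size in KB
    start=$(date +%s)
    cp -R "$src" "$dest" || return 1
    sync                                          # flush cached writes before stopping the clock
    end=$(date +%s)
    elapsed=$((end - start))
    echo "${elapsed}s, ${size_kb} KB, $(throughput "$size_kb" "$elapsed") MB/s"
}
```

A run might look like `timed_copy ~/testdata /mnt/aoe/testdata`, where both paths are placeholders. One-second resolution is coarse, but it is comparable to reading a clock's second hand.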

Conclusions

While these results are not overly scientific and very specific to my environment, they do give some valuable comparisons. In my environment, AoE performed no better than an Openfiler or a common Ubuntu (Samba) share. It certainly was much slower than local storage but honestly, what isn’t? If AoE had been noticeably faster than Openfiler, I might have considered finding a use for it. As it stands, it was no faster than any other share on the ESXi box. It did take precious little time and effort to set up and test, so I consider it time well spent. In a large environment, perhaps on a SAN, the results might be different.

Hi,

First of all, thank you very much for giving us a reference 🙂 Second, in your environment the network itself is the limiting factor, so the throughput generated by the Windows Cache & Memory Manager lazy writer thread should saturate the network pretty fast. And it actually did so 🙂 To find out which one is the real deal, you should test under heavy multithreaded load with something like Intel I/O Meter and check IOps rather than MBps. And check average latency (response time) as well.

Thanks!

Anton Kolomyeytsev

CTO, StarWind Software

Thanks, Anton, for the suggestions. I will test with a multi-threaded load when I get a chance. The simple transfer benchmark was valid in my home setting as this share would never be hit by more than one (possibly two) users at a time. Anything requiring more than that would be run using local storage, be it on my primary machine or on the VMware server with its local storage.

While your measurement of a simple file copy is all good and well, it’s only part of the story.

I would suggest you try testing with IOmeter and start looking at more than the straight throughput of a simple sequential copy operation.

I would suggest you use IOmeter to test 50% random with a 60/40 read/write mix against your various types of shares at a few different block sizes.

When you are talking about SAN workloads, particularly with virtualization…. bandwidth is rarely the performance bottleneck that matters regarding storage performance.

It’s how many IOPs your solution is capable of against a more realistic workload; in the real world, I/O is usually semi-random.

Sequential IOPs like a file copy are an idealized situation that doesn’t reflect the real world (under such conditions, you should get close to the full throughput of your bottleneck, the disks).

In other words… AoE vs iSCSI vs FC, etc, matter when your metric of storage performance is _latency_ and IOPs.

If you just need simple file copies to run fast in a single sequential workload, that’s really easy: just use what’s cheapest and easiest in your environment.

If you need to pay extra for performance, usually MB/s of a file copy operation is not a relevant metric.

Unless your filesystem is highly fragmented, copying 10000 files is a very predictable workload where readahead cache in your OS may be hiding significant characteristics of your protocol performance 🙂